In our previous article, we explored how Copilot Studio makes it possible to build AI agents quickly with low-code tools. For many enterprises, this is the right starting point for proofs of concept, internal copilots, or customer-facing chat solutions that can be designed and deployed without requiring full engineering teams.

But as projects grow in scope and business units begin to rely on these solutions, the limitations of low-code environments become more visible. Enterprises may need advanced AI model orchestration, tighter governance, or custom integrations that go beyond what Copilot Studio was designed for. At that point, Azure AI Foundry enters the picture: a comprehensive, secure platform for designing, deploying, and managing AI apps and agents, with integrated developer tools, broad model choice, and the scalability that both enterprises and startups need.

This article examines when it makes sense to move from Copilot Studio to Azure AI Foundry and what enterprises should have in place before making the shift. The goal is to help CTOs and technical decision-makers assess their specific use cases and decide whether their AI journey requires moving from a low-code foundation to a full-code, enterprise-grade platform.

Most enterprises begin their AI journey with Copilot Studio because it lowers the barrier to entry. It’s a low-code environment designed to let teams create conversational agents and copilots without building everything from scratch.

These traits make Copilot Studio a natural choice for proofs of concept, internal copilots, and customer-facing chat solutions.

By design, Copilot Studio covers the first phase of AI adoption: getting copilots into the hands of users quickly and proving their business value. The trade-off is limited flexibility and control, which is where Azure AI Foundry comes into play.

While Copilot Studio can take you far, there are clear signals that your enterprise may have outgrown a purely low-code environment. These often emerge as AI moves from experimentation to mission-critical operations.

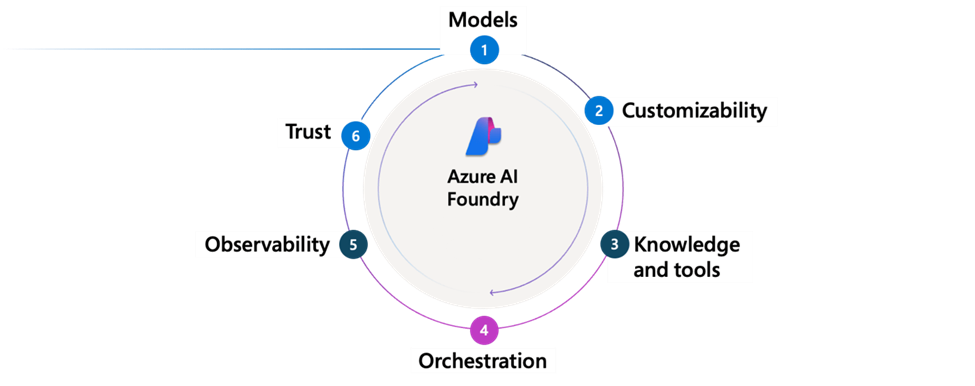

If your copilots are evolving beyond FAQs or task automation into multi-step reasoning, decision workflows, or integration with proprietary systems, Copilot Studio alone may not be enough. Agents with these capabilities call for Azure AI Foundry, which supports orchestration across multiple models, agent pipelines, and external APIs with full developer control.

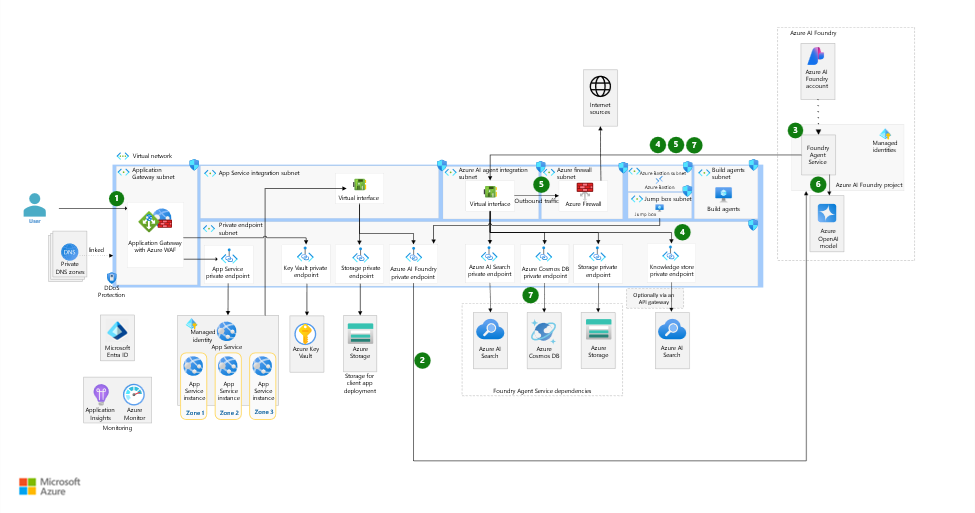

Chat Reference Architecture Example – Azure AI Foundry. Source: https://learn.microsoft.com/en-us/azure/architecture/ai-ml/architecture/baseline-azure-ai-foundry-chat

Copilot Studio gives you access to pre-trained models, but enterprises often require fine-tuning on domain-specific data or advanced RAG pipelines. Foundry lets you bring in models from a wide range of leading providers through its model catalog (OpenAI, Meta, Mistral, and others) and customize them with your own data.

At scale, copilots must meet strict compliance, monitoring, and security standards. Foundry projects support role-based access control (RBAC) for managing permissions, network isolation, telemetry, and detailed traceability, capabilities that are critical for regulated industries like finance, healthcare, and insurance.

When copilots need to plug into data pipelines, ML workflows, or analytics platforms, Foundry provides first-class integration with Azure Machine Learning, Azure AI Search, and Semantic Kernel, plus SDKs in Python, .NET, and JavaScript.

If copilots are being adopted enterprise-wide, the operational load increases. A simple setup is enough to get initial pilots off the ground, but enterprise-wide deployment introduces more complex requirements. Foundry’s observability, environment isolation, and project-level governance help prevent bottlenecks and let enterprises manage AI as part of their core infrastructure.

Enterprises that want to stay ahead of model innovation benefit from Foundry’s expanding model catalog, which includes OpenAI GPT models as well as Grok (xAI), DeepSeek, and other third-party models. Copilot Studio is designed for accessibility; Foundry is designed for flexibility and longevity.

In short, if your copilots are mission-critical, multi-model, or compliance-sensitive, it’s time to consider migrating to Azure AI Foundry.

Shifting from Copilot Studio to Azure AI Foundry isn’t as easy as flipping a switch. It’s a move from a low-code sandbox to an engineering-grade AI platform, and enterprises need a few fundamentals in place before taking that step. Planning the integration with Microsoft Fabric at this stage is also important for comprehensive data management and workflow integration once you are on Foundry.

If your Azure environment isn’t already well-governed, Foundry will expose the gaps quickly. You’ll need resource policies, cost controls, and RBAC structures ready to handle multi-team workloads. Think of this as cloud hygiene that saves you from security and budget surprises later.

Copilot Studio works fine with structured sources and connectors, but Foundry assumes you can handle bigger and messier data. That means having pipelines for ingestion, tools for governance (Purview, Fabric OneLake), and secure storage that supports RAG or fine-tuning scenarios, including unstructured data types such as images.
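Ingestion pipelines for RAG usually split documents into overlapping chunks before they are embedded and indexed, so that context near a chunk boundary is not lost. The sketch below is a minimal word-based splitter in plain Python; the default sizes are illustrative assumptions rather than Foundry or Azure AI Search settings, and production pipelines often count tokens instead of words.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap  # how far each new chunk advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Usage with a tiny document and small, illustrative settings.
doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(doc, max_words=4, overlap=1):
    print(chunk)
```

Each chunk here repeats the last word of the previous one; in a real pipeline, the overlap size trades index size against the risk of splitting a relevant passage.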

At the Foundry level, copilots are no longer “experiments.” You’ll be expected to enforce enterprise-grade security such as network isolation, encryption, key management with Azure Key Vault, and audit trails.

Low-code opens the door to business users, but Foundry is developer territory. You’ll want engineers who know the Azure SDKs and languages such as Python, .NET, or JavaScript, data scientists who can train or fine-tune models, and MLOps specialists who keep everything running in production. Not every role is required for a PoC, but for enterprise adoption you’ll need depth on the bench.

Foundry can scale, but so can your costs if you’re not paying attention. Fine-tuned models and high-volume inference add up quickly. Before you jump, put FinOps practices such as tagging, monitoring, and alerting in place so that spend doesn’t spiral.
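The tagging-plus-alerting idea can be sketched in a few lines: group spend by a cost-allocation tag and flag any group nearing its budget. In Azure, the figures would typically come from Cost Management data, and native budgets can send alerts for you; this plain-Python version only illustrates the logic. The `cost-center` tag key, resource names, amounts, and 80% threshold are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CostRecord:
    resource: str                 # hypothetical resource name
    cost_usd: float               # spend for the billing period
    tags: dict[str, str] = field(default_factory=dict)


def spend_by_tag(records: list[CostRecord], tag_key: str) -> dict[str, float]:
    """Sum spend per value of `tag_key`; resources missing the tag land in 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.tags.get(tag_key, "untagged")] += record.cost_usd
    return dict(totals)


def budget_alerts(totals: dict[str, float], budgets: dict[str, float],
                  threshold: float = 0.8) -> list[str]:
    """Flag groups that crossed `threshold` of their budget or have no budget at all."""
    alerts = []
    for group, spent in sorted(totals.items()):
        budget = budgets.get(group)
        if budget is None:
            alerts.append(f"{group}: ${spent:,.2f} spent with no budget assigned")
        elif spent >= threshold * budget:
            alerts.append(f"{group}: ${spent:,.2f} is {spent / budget:.0%} of ${budget:,.2f}")
    return alerts


# Hypothetical monthly spend for a few Foundry-related resources.
records = [
    CostRecord("gpt-deployment-support", 850.0, {"cost-center": "support"}),
    CostRecord("search-index-support", 120.0, {"cost-center": "support"}),
    CostRecord("finetune-finance", 300.0, {"cost-center": "finance"}),
    CostRecord("sandbox-project", 40.0),
]
totals = spend_by_tag(records, "cost-center")
for alert in budget_alerts(totals, {"support": 1000.0, "finance": 1000.0}):
    print(alert)
```

Reporting untagged spend separately is a deliberate choice: it surfaces resources that slipped past your tagging policy instead of hiding them in another group's total.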

Finally, don’t move just because “Foundry is the next thing.” Know where you’re heading. Are you building internal enablers, customer-facing products, or cross-enterprise copilots? How will you use enterprise knowledge to inform your AI strategy? Have a roadmap for which use cases really justify the shift.

If you can tick most of these boxes, you’re in a strong position to get value from Azure AI Foundry rather than just more complexity.

The jump from Copilot Studio to Azure AI Foundry doesn’t need to be a big-bang migration. The best approach is a staged path where you validate at each step, gradually introducing more engineering-heavy components.

The Azure AI Foundry SDK plays a key role in this process, providing a unified API and streamlined tools that make migration and development faster and more reliable.

You don’t have to abandon Copilot Studio to start with Foundry. Many enterprises begin by connecting Copilot Studio agents to Azure AI Search for retrieval-augmented generation (RAG) or by exposing custom endpoints from Foundry-hosted models. This hybrid setup lets you test Foundry capabilities while keeping your low-code agents in production.
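To see what the retrieval step in such a hybrid RAG setup contributes, here is a toy version in plain Python. It ranks documents by shared query terms and builds a grounded prompt. This keyword-overlap scoring is a deliberately simplified stand-in for a real Azure AI Search index (which offers keyword, vector, and hybrid search), and the document IDs, texts, and prompt wording are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a real index would also handle stemming, synonyms, etc."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k doc IDs ranked by the number of query terms they share."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(body)), doc_id) for doc_id, body in docs.items()]
    # Drop documents with no overlap, then sort by score (desc) and ID (stable ties).
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in ranked[:k]]


def build_grounded_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Prepend the retrieved passages so the model answers from enterprise content."""
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, k))
    return ("Answer using only the sources below and cite them by ID.\n"
            f"Sources:\n{sources}\n\nQuestion: {query}")


docs = {
    "hr-01": "Employees accrue 25 vacation days per year.",
    "it-07": "Reset your VPN password via the self-service portal.",
    "hr-02": "Vacation requests need manager approval.",
}
print(build_grounded_prompt("How many vacation days do employees get?", docs))
```

The shape stays the same when the toy ranker is swapped for a real search index: retrieve a few relevant passages, then constrain the model to answer from them.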

Set up small, isolated projects inside Foundry for experimentation.

At this stage, focus on high-value use cases where Foundry’s flexibility really matters, such as multi-step reasoning, custom RAG, or domain-specific copilots.

Run Copilot Studio and Foundry side by side. For example, keep customer-facing copilots in Copilot Studio for stability, but shift advanced prototypes or heavy-use internal agents to Foundry. Use shared services like Azure Monitor and Application Insights to compare performance and cost across both environments, and use that telemetry to optimize each one.
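To compare environments on equal terms, you can reduce exported request telemetry to a few shared metrics. The sketch below assumes each request has already been exported (for example, from Application Insights) as a hypothetical `(environment, latency_ms, cost_usd)` tuple, and computes request count, nearest-rank p95 latency, and average cost per environment. The sample data is made up.

```python
from collections import defaultdict


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile (integer math avoids float rounding in the rank)."""
    ordered = sorted(values)
    rank = max(1, (95 * len(ordered) + 99) // 100)  # ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def summarize(records: list[tuple[str, float, float]]) -> dict[str, dict[str, float]]:
    """Group (environment, latency_ms, cost_usd) records and summarize each environment."""
    by_env: dict[str, list[tuple[float, float]]] = defaultdict(list)
    for env, latency_ms, cost_usd in records:
        by_env[env].append((latency_ms, cost_usd))
    summary = {}
    for env, rows in by_env.items():
        summary[env] = {
            "requests": len(rows),
            "p95_latency_ms": p95([latency for latency, _ in rows]),
            "avg_cost_usd": sum(cost for _, cost in rows) / len(rows),
        }
    return summary


# Hypothetical telemetry: 20 requests per environment.
records = [("copilot-studio", 400.0 + 10 * i, 0.002) for i in range(20)]
records += [("foundry", 300.0 + 15 * i, 0.003) for i in range(20)]
for env, stats in summarize(records).items():
    print(env, stats)
```

Reporting a tail percentile alongside average cost keeps a cheaper environment from looking better simply because its slowest requests are hidden in a mean.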

Once your team is comfortable with the tooling and operational model, move end-to-end workflows into Foundry.

Once you transition from Copilot Studio to Azure AI Foundry, the technical knobs get much more granular. That’s both the opportunity and the challenge: you gain control, but you’re also responsible for optimization.

Components of Azure AI Foundry. Source: https://learn.microsoft.com/en-us/azure/ai-foundry/agents/overview

Azure AI Foundry runs on a quota-based model. Each deployment has limits defined by tokens per minute, requests per minute, and requests per day. Costs depend on the model you deploy, the deployment type, and the volume of input and output tokens you consume.

To keep costs in check:
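A useful first step is to estimate token spend and quota headroom before a workload goes live. The sketch below does this arithmetic in plain Python; the prices, quota limits, and traffic figures are placeholders rather than real Azure numbers, and the `Workload` and `Deployment` types are hypothetical. Actual rate-limit accounting can differ (for example, the service may count the requested maximum output tokens rather than the tokens actually generated), so treat the result as a first-order estimate.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_minute: float  # expected peak request rate
    input_tokens: int           # average prompt tokens per request
    output_tokens: int          # average completion tokens per request


@dataclass
class Deployment:
    tpm_limit: int              # tokens-per-minute quota (look up your own)
    rpm_limit: int              # requests-per-minute quota (look up your own)
    price_in_per_1k: float      # USD per 1K input tokens (placeholder, not a real price)
    price_out_per_1k: float     # USD per 1K output tokens (placeholder, not a real price)


def peak_tpm(w: Workload) -> float:
    """Tokens per minute the workload consumes at its peak request rate."""
    return w.requests_per_minute * (w.input_tokens + w.output_tokens)


def fits_quota(w: Workload, d: Deployment) -> bool:
    """True if peak traffic stays within both the TPM and RPM limits."""
    return peak_tpm(w) <= d.tpm_limit and w.requests_per_minute <= d.rpm_limit


def monthly_cost(w: Workload, d: Deployment, requests_per_month: int) -> float:
    """First-order token cost: per-request input + output cost times monthly volume."""
    per_request = ((w.input_tokens / 1000) * d.price_in_per_1k
                   + (w.output_tokens / 1000) * d.price_out_per_1k)
    return per_request * requests_per_month


w = Workload(requests_per_minute=60, input_tokens=1500, output_tokens=500)
d = Deployment(tpm_limit=100_000, rpm_limit=600, price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"peak TPM: {peak_tpm(w):,.0f}, fits quota: {fits_quota(w, d)}")
print(f"estimated monthly cost: ${monthly_cost(w, d, 1_000_000):,.2f}")
```

In this made-up scenario, the workload needs more tokens per minute than the deployment allows, which is exactly the kind of gap that shows up later as throttling if it isn't caught during planning.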

Foundry enforces quotas at the resource and subscription level. When limits are reached, requests return 429 “Too Many Requests” errors and latency increases. To manage this:
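A common client-side pattern for 429s is to retry with exponential backoff, honoring a retry-after hint when the service provides one. The sketch below is a generic, standard-library-only version; `RateLimited` and `call_with_backoff` are hypothetical names, not part of any Azure SDK, and the delay defaults are illustrative. Official SDK clients often ship their own retry policies, so check yours before layering another on top.

```python
import random
import time
from typing import Callable, Optional


class RateLimited(Exception):
    """Hypothetical exception a client wrapper raises when the service returns HTTP 429."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("429 Too Many Requests")
        self.retry_after = retry_after  # seconds suggested by the service, if any


def call_with_backoff(call: Callable[[], str], max_retries: int = 5,
                      base_delay: float = 1.0, max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry `call` on RateLimited, honoring retry_after or using capped exponential backoff."""
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited as err:
            if attempt == max_retries:
                raise  # out of retries: surface the 429 to the caller
            if err.retry_after is not None:
                delay = err.retry_after
            else:
                # "Full jitter": random wait up to base_delay * 2^attempt, capped at max_delay.
                delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)
    raise RuntimeError("unreachable")


# Usage: a fake call that is throttled twice, then succeeds.
attempts = {"n": 0}


def flaky_call() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimited(retry_after=0.5)
    return "ok"


waits: list[float] = []
print(call_with_backoff(flaky_call, sleep=waits.append), waits)
```

Injecting `sleep` keeps the function testable: passing `waits.append` records the delays instead of pausing. Backoff only smooths bursts; sustained 429s mean the workload needs more quota, another deployment, or less traffic.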

Unlike Copilot Studio’s simplified logging, Foundry provides enterprise-grade observability.

Foundry introduces projects as the unit of isolation.

Operating in Foundry is closer to software engineering than to low-code development.

In short: Foundry gives you more control, but it also expects more engineering maturity. Cost efficiency comes from FinOps discipline, performance stability from quota planning, and compliance from project-level governance.

The move to Azure AI Foundry isn’t just about unlocking more technical features; it’s about positioning AI as a scalable, measurable investment. For CTOs, the question isn’t “can we build it?” but “does it deliver sustained value at enterprise scale?”

With Foundry, you gain fine-grained control over data, models, and user access. For industries like banking, insurance, and healthcare, this is often the deciding factor. You can enforce RBAC, audit interactions, and meet regulatory requirements without bolting on external systems.

Copilot Studio abstracts away infrastructure, but that also means limited visibility into how resources are consumed. Foundry, on the other hand, lets you monitor workloads at the level of models, prompts, and pipelines. Paired with FinOps practices, this visibility helps you prevent runaway costs and allocate spend to use cases that actually return value.

Foundry enables experimentation without compromising governance. New copilots can be prototyped, tested, and deployed under the same umbrella, shortening time-to-value for business units.

Where Copilot Studio is about accessibility, Foundry is about flexibility. Access to a growing catalog of models (OpenAI, Meta, Mistral, DeepSeek, xAI) helps you avoid lock-in to a single provider. This future-proofs your strategy and lets you adapt as new models and standards, such as the Model Context Protocol (MCP), emerge.

Enterprises moving workloads into Foundry often report ROI not from “one big win,” but from a portfolio of use cases, such as customer service copilots, internal knowledge bots, and financial analysis assistants, all governed and monitored under a single framework. IDC estimates returns of $3.70 for every $1 invested in generative AI, with some organizations reporting over 10x ROI when scaling the right use cases.

Copilot Studio is a strong starting point. It gets copilots into production quickly and demonstrates value without a heavy lift. But when your copilots need to handle complex workflows, integrate with enterprise data, or meet compliance and scale requirements, it’s time to look at Azure AI Foundry.

Foundry gives you the flexibility, governance, and control to run AI as part of your core infrastructure, not just as a side project. The move requires more maturity across cloud, data, and engineering, but it also unlocks AI’s full enterprise potential.

If you’re reaching the limits of Copilot Studio, the next step is clear: start preparing for Foundry.

Schedule a call with our team of experts to discuss where you are today, where you want to go, and how to make the transition smooth and cost-effective.