Azure AI Search is Microsoft’s managed search platform designed for enterprise applications. It allows teams to ingest data from multiple sources, enrich it with AI, and make it discoverable through secure APIs or LLM-integrated workflows.

The service supports three core retrieval methods: keyword (full-text) search, semantic ranking, and vector search.

These capabilities can be combined into hybrid queries, making Azure AI Search a strong fit for knowledge portals, contextual AI assistants, and retrieval-augmented generation (RAG) pipelines at scale.

Azure AI Search can be implemented in three core stages: data ingestion, indexing and configuration, and querying and integration. Each stage gives teams flexibility and control over how content is stored, enriched, and retrieved. This is the pattern Microsoft documents for building with Azure AI Search, and it mirrors how the platform is designed to operate.

1. Ingest and Enrich Data

2. Index and Configure Retrieval

Text search is performed using full text search capabilities, leveraging traditional keyword-based querying. Inverted indexes are used to efficiently retrieve relevant documents by storing processed tokens from documents for fast lookup.
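To make the inverted-index idea concrete, here is a minimal sketch in Python. It is a toy illustration of the data structure, not how Azure AI Search implements it internally; the tokenizer and the AND-style matching are simplifying assumptions.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters (a simplified analyzer)."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each token to the set of document IDs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs of documents that contain every query token (AND semantics)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


# Hypothetical sample documents for illustration only.
docs = {
    "doc1": "Azure AI Search supports vector search",
    "doc2": "Full text search uses inverted indexes",
}
index = build_inverted_index(docs)
print(search(index, "vector search"))
```

Lookup cost depends on the number of query tokens rather than the number of documents, which is why this structure suits keyword retrieval over large corpora.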

3. Query and Integrate

Key Advantages of the Workflow

Getting started with Azure AI Search (formerly Azure Search) begins with creating a search service in the Azure portal, where you select a pricing tier and deployment region. The free tier suits smaller projects, tutorials, and experiments with code samples, while the Basic and Standard tiers are designed for larger projects and production workloads, with more features and capacity.

Once your search service is provisioned, you can use the Import Data wizard to connect to a supported data source, such as Azure Cosmos DB, Azure SQL Database, or Azure Blob Storage. This step allows you to create and populate a search index tailored to your data and use case. After your search index is set up, you can explore and test your search capabilities using the Search Explorer in the Azure portal, or integrate with your applications via REST APIs and Azure SDKs. This flexible setup process ensures that both small and large teams can create, manage, and scale their search solutions efficiently within the Azure ecosystem.
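As a rough sketch of the REST integration path, the snippet below builds (but does not send) a keyword query against the Search Documents endpoint using only the Python standard library. The service name, index name, and key are placeholders, and the `2023-11-01` API version is an assumption; check which version your service supports.

```python
import json
from urllib.request import Request

API_VERSION = "2023-11-01"  # assumed API version; adjust to your service


def build_search_request(service_name: str, index_name: str, api_key: str,
                         search_text: str, top: int = 5) -> Request:
    """Build a POST request for a simple full-text query (nothing is sent here)."""
    url = (
        f"https://{service_name}.search.windows.net"
        f"/indexes/{index_name}/docs/search?api-version={API_VERSION}"
    )
    body = {"search": search_text, "top": top, "count": True}
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values for illustration only.
request = build_search_request("my-service", "docs-index", "<query-key>", "vpn setup")
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would execute the query. In application code, the Azure SDKs wrap the same endpoint and handle retries and authentication for you.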

These scenarios are widely documented and recommended by Microsoft as standard applications for Azure AI Search. Azure AI Search supports premium features and is designed to run significant workloads for enterprise applications.

Index content from SharePoint, internal wikis, manuals, or PDFs to build a unified search interface for employees. Faceted navigation can be implemented to allow users to filter and refine search results by categories or attributes, enhancing the precision and usability of the search experience. This pattern supports RAG-based experiences and knowledge discovery.

Use Azure AI Search as the retriever in a RAG architecture by feeding contextually relevant chunks from your private data into Azure OpenAI prompts. This ensures accuracy, reduces hallucination, and keeps the model aligned with fresh knowledge. By leveraging large language models and generative AI, you can further enhance retrieval-augmented generation workflows, enabling more intelligent and context-aware information retrieval within your applications.
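The grounding step in this pattern can be sketched as a small prompt-assembly function. This is an illustrative example rather than a prescribed implementation: the chunk shape (`source`, `content`), the character budget, and the instruction wording are all assumptions, and the retrieval and model calls are left out.

```python
def build_grounded_prompt(question: str, chunks: list[dict], max_chars: int = 4000) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources.

    Each chunk is assumed to be a dict with 'source' and 'content' keys,
    already ordered by relevance by the retriever.
    """
    context_parts = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        entry = f"[{i}] ({chunk['source']}) {chunk['content']}"
        # Stop adding chunks once the context budget would be exceeded.
        if used + len(entry) > max_chars:
            break
        context_parts.append(entry)
        used += len(entry)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical retrieved chunks for illustration only.
sample_chunks = [
    {"source": "hr-policy.pdf", "content": "Employees accrue 20 vacation days per year."},
    {"source": "handbook.docx", "content": "Requests go through the HR portal."},
]
print(build_grounded_prompt("How many vacation days do employees get?", sample_chunks))
```

Numbering the sources lets the model cite them, and the explicit fallback instruction is one common way to discourage answers that go beyond the retrieved content.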

Enable semantic and vector search within customer-facing applications for catalog search, product discovery, or content lookup with intelligent filtering and relevance adjustments. Full-text search is also available for traditional keyword-based querying, supporting exact-match filtering and relevance scoring.

Enable users to query by meaning, rather than exact keywords, across policies, legal documents, reports, or corporate archives using vector search and semantic capabilities. In addition to vector indexes, inverted indexes are used to efficiently retrieve relevant documents by storing and searching processed tokens from the text.

Enable support bots or knowledge assistants to pull information from indexed documentation, FAQs, and CRM knowledge bases to respond to queries or guide users, enhanced by RAG patterns. Support bots and knowledge assistants of this kind are typical search applications built on Azure AI Search.

While open-source search stacks like Elasticsearch or OpenSearch can be powerful, they require significant effort to manage, secure, and integrate, especially at enterprise scale. Azure AI Search addresses these challenges by providing a fully managed, enterprise-ready platform. The selected tier determines the capabilities, resource allocation, and scalability of Azure AI Search, making it suitable for different project sizes and requirements.

Tiers also differ in how infrastructure is allocated. The free tier shares system resources among multiple subscribers, while higher tiers provide dedicated resources that can be scaled to support larger workloads.

Azure AI Search is fully managed: there are no servers or clusters to patch, and high availability is built in. You scale capacity by adjusting partitions and replicas (Search Units) manually or via API; it doesn’t auto-scale in real time. Higher service tiers are designed to support significant workloads and enterprise-scale deployments. This removes much of the operational burden compared to open-source stacks like Elasticsearch or OpenSearch.

Vector search, semantic ranking, and native integration with Azure OpenAI Service simplify retrieval-augmented generation (RAG) use cases without external plugins or custom pipelines.

Supports encryption at rest and in transit, Microsoft Entra ID for RBAC, and Private Link for private networking. Fine-grained index and document-level security are built-in.

OCR, language detection, entity recognition, and other cognitive skills can be applied during ingestion using built-in skillsets, with no need for separate ETL pipelines.

Azure AI Search is covered by Microsoft’s enterprise support and SLAs. It integrates with Azure Monitor, Defender for Cloud, and Azure Policy, so teams can enforce governance policies, track usage, and meet compliance requirements across cloud resources.

The platform supports hybrid queries that combine keyword, semantic, and vector search scores into a single relevance calculation. Reciprocal rank fusion can be used to merge the ranked results from these different search methods, improving overall relevance and reliability. This improves accuracy by leveraging both lexical matching and semantic similarity, and avoids the false negatives that can occur when relying solely on embeddings.
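Reciprocal rank fusion is simple enough to sketch in a few lines. The version below follows the commonly published formula, where each document scores the sum of 1 / (k + rank) across the result lists; the constant `k = 60` is a conventional default and is treated here as an assumption rather than a statement about the service's internals.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document's fused score is the sum of 1 / (k + rank) over every
    list in which it appears, with ranks starting at 1.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties broken by document ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical result lists from a keyword query and a vector query.
keyword_results = ["a", "b", "c"]
vector_results = ["b", "d", "a"]

fused = reciprocal_rank_fusion([keyword_results, vector_results])
print([doc_id for doc_id, _ in fused])  # ['b', 'a', 'd', 'c']
```

Documents that appear in both lists ("a" and "b") rise above those found by only one method, which is the behavior that makes hybrid retrieval more robust than either method alone. Because RRF uses ranks rather than raw scores, the keyword and vector scores do not need to be on a comparable scale.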

Beyond search, Azure AI Search works seamlessly with services like Blob Storage, SQL Database, Cosmos DB, and Azure AI services. This accelerates the development of full-stack solutions without building complex integrations or maintaining connectors yourself.

Pricing Overview (Comprehensive)

To create and manage Azure AI Search services, you must have an Azure subscription.

Azure AI Search pricing is determined by three main factors:

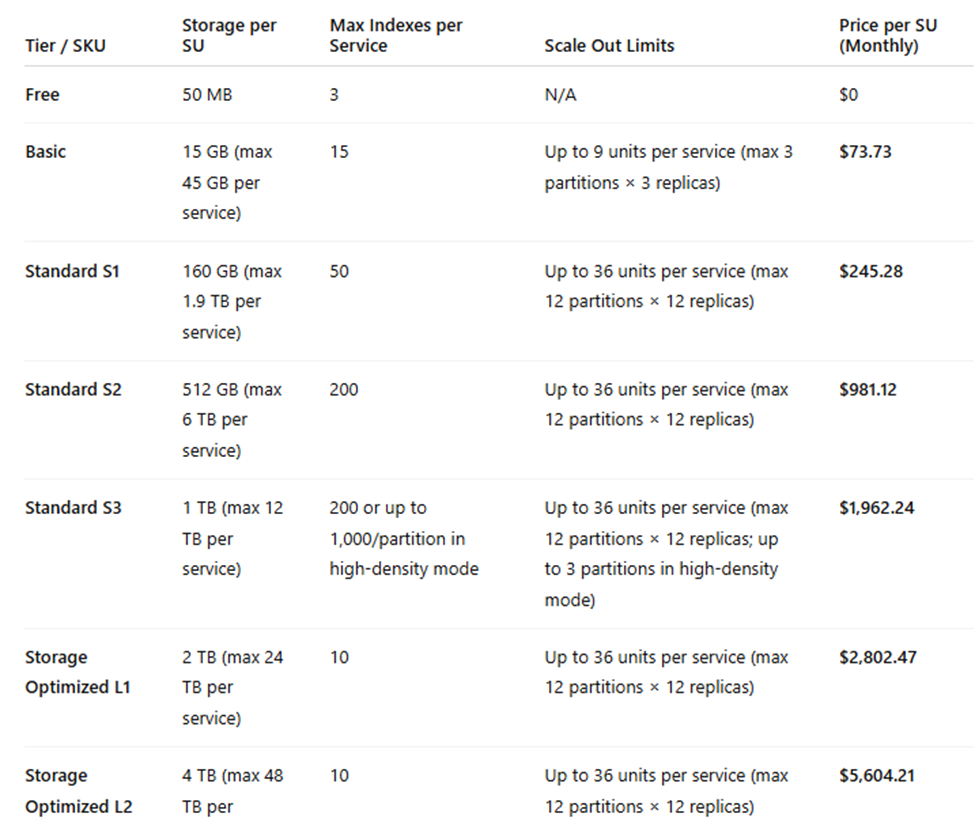

When creating a search service, you select a pricing tier in the Azure portal. The available tiers include a free search service (also called the free service), which is suitable for small projects, tutorials, or code samples. Only one free search service can be created per Azure subscription; it comes with resource limitations and cannot be scaled.

Premium features are only available in higher or paid tiers, so the free service does not include advanced capabilities or scalability options.

A Search Unit combines partitions (for storage) and replicas (for query load balancing). Your total cost is SU cost × number of partitions × number of replicas.
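The arithmetic above can be expressed as a small helper. The per-SU price used in the example is a made-up placeholder, not a real Azure rate; actual prices vary by tier and region.

```python
def search_units(partitions: int, replicas: int) -> int:
    """Number of billable Search Units for a given topology."""
    if partitions < 1 or replicas < 1:
        raise ValueError("partitions and replicas must both be at least 1")
    return partitions * replicas


def estimated_monthly_cost(su_monthly_price: float, partitions: int, replicas: int) -> float:
    """Estimated monthly cost: price per SU multiplied by the number of SUs."""
    return su_monthly_price * search_units(partitions, replicas)


# Placeholder price of 100.0 per SU per month, for illustration only.
print(search_units(partitions=2, replicas=3))                   # 6
print(estimated_monthly_cost(100.0, partitions=2, replicas=3))  # 600.0
```

Because the two factors multiply, adding a replica to a service with many partitions costs more than adding one to a single-partition service.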

AI enrichment, such as OCR, entity recognition, translation, or image extraction, is billed separately from SUs and depends on usage:

These figures use Microsoft’s current pricing table and scaling rules. To get precise estimates by region and workload, use the Azure Pricing Calculator.

To maximize the effectiveness and performance of your Azure Search implementation, start with a well-structured index schema that reflects your data’s organization. Choose appropriate data types, configure fields for search, filtering, and faceting, and define custom scoring profiles to boost search relevance. Incorporate cognitive skills such as entity recognition, language detection, key phrase extraction, and OCR to enrich your data and improve the accuracy of search results.
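As an illustration of these schema choices, the sketch below expresses an index definition as a Python dictionary in the shape used by the REST API's index definition. The index name, field names, and weights are hypothetical, and the helper functions are only sanity checks, not part of any SDK.

```python
# Hypothetical index definition; names and weights are illustrative only.
index_definition = {
    "name": "knowledge-base",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True, "filterable": True},
        {"name": "title", "type": "Edm.String", "searchable": True},
        {"name": "content", "type": "Edm.String", "searchable": True},
        {"name": "category", "type": "Edm.String", "filterable": True, "facetable": True},
        {"name": "lastUpdated", "type": "Edm.DateTimeOffset", "filterable": True, "sortable": True},
    ],
    "scoringProfiles": [
        # Matches in the title count three times as much as matches in the content.
        {"name": "boost-title", "text": {"weights": {"title": 3, "content": 1}}},
    ],
}


def fields_with(definition: dict, attribute: str) -> list[str]:
    """Names of fields that have the given boolean attribute enabled."""
    return [f["name"] for f in definition["fields"] if f.get(attribute, False)]


def check_scoring_profiles(definition: dict) -> None:
    """Raise if a scoring profile weights a field that is not searchable."""
    searchable = set(fields_with(definition, "searchable"))
    for profile in definition.get("scoringProfiles", []):
        for field_name in profile["text"]["weights"]:
            if field_name not in searchable:
                raise ValueError(f"{profile['name']}: '{field_name}' is not searchable")


check_scoring_profiles(index_definition)
print(fields_with(index_definition, "facetable"))
```

Enabling only the attributes a field actually needs keeps the index smaller, since each of filtering, sorting, and faceting adds its own storage overhead.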

Regularly monitor search usage and performance metrics through Azure Monitor, adjusting your pricing tier, replicas, and partitions as your needs evolve to maintain a balance between cost and system resources. Keep your index schema and data current to align with changing business requirements, and optimize query patterns to deliver fast, relevant results. For production environments, add new, versioned fields rather than modifying existing fields in place, since changing an existing field definition typically forces an index rebuild and the downtime that comes with it.

By following these best practices, you can ensure your Azure Search deployment remains robust, scalable, and aligned with your organization’s goals.

When problems occur in Azure Search, a structured troubleshooting process helps pinpoint and resolve issues quickly. Start by verifying the status and configuration of your search service in the Azure portal to confirm it’s operating as intended. Review your search index schema and underlying data for inconsistencies, missing fields, or errors that could impact search relevance or result accuracy.

Leverage the official Azure Search documentation and community forums for guidance on common issues and proven solutions. If you encounter unexpected search results or performance bottlenecks, refine your search queries, adjust indexing parameters, or experiment with scoring profiles. For complex or persistent issues, engage Azure Support for specialized assistance to restore optimal search functionality. Maintaining a proactive troubleshooting strategy ensures your Azure Search implementation continues to deliver reliable, high-quality results to your users.

If all else fails or if you don’t have the resources to manage these tasks, we can help you diagnose issues, optimize performance, and ensure your Azure Search deployment meets both technical and business goals.

Before deploying Azure AI Search, you need an Azure subscription. The selected tier determines the available features, scalability, and resource limits for your deployment.

Semantic Search Availability - Semantic ranking is only available on Standard (S1/S2/S3) and Storage Optimized (L1/L2) tiers. It must be explicitly enabled, and it isn’t supported in the Free or Basic tiers. Check the region where you plan to deploy, as availability can vary.

Vector Search Requirements - Vector search is supported on all billable tiers (Basic, Standard, and Storage Optimized) but requires that you store embeddings in vector fields. Embeddings can be generated using Azure OpenAI, other ML models, or directly within Azure AI Search skillsets and indexers.
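A vector field and its supporting configuration might look like the sketch below, again written as Python dictionaries in the shape of the REST index definition. The field, profile, and algorithm names are hypothetical, the `2023-11-01` schema shape is an assumption, and the dimension count must match whichever embedding model you use.

```python
def vector_field(name: str, dimensions: int, profile: str) -> dict:
    """Definition of a field that stores one embedding per document."""
    return {
        "name": name,
        "type": "Collection(Edm.Single)",
        "searchable": True,
        "dimensions": dimensions,
        "vectorSearchProfile": profile,
    }


# Hypothetical names; the profile ties the field to an HNSW algorithm configuration.
vector_search_config = {
    "algorithms": [{"name": "hnsw-default", "kind": "hnsw"}],
    "profiles": [{"name": "default-profile", "algorithm": "hnsw-default"}],
}


def build_document(doc_id: str, content: str, field: dict, embedding: list[float]) -> dict:
    """Attach an embedding to a document, rejecting vectors of the wrong length."""
    if len(embedding) != field["dimensions"]:
        raise ValueError(
            f"expected {field['dimensions']} dimensions, got {len(embedding)}"
        )
    return {"id": doc_id, "content": content, field["name"]: embedding}


# A 3-dimensional field keeps the example small; real models use far more dimensions.
field = vector_field("contentVector", dimensions=3, profile="default-profile")
print(build_document("1", "VPN setup guide", field, [0.1, 0.2, 0.3]))
```

Checking the vector length before upload catches the most common mistake early: indexing embeddings from one model into a field sized for another.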

Index Build Time and Partitioning - Large indexes and those using AI enrichment can take significant time to build. Plan for indexing time and choose an appropriate number of partitions to distribute storage and indexing throughput. Incremental updates and scheduled indexer runs can help keep data fresh.

Query Complexity and Latency - Hybrid queries (keyword + semantic + vector) improve result quality but may increase response time. Complex scoring profiles or semantic rankers also add processing overhead. Test latency against expected query patterns, especially if supporting real-time applications.
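One lightweight way to test latency is to time a representative query repeatedly and look at percentiles rather than averages. The harness below is a generic sketch; `run_query` stands in for whatever function issues your search request and is an assumption of this example.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(run_query: Callable[[], object], iterations: int = 50) -> dict[str, float]:
    """Time repeated calls to run_query and report latency percentiles in milliseconds."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_query()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank method for the 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


# Stand-in workload; replace the lambda with a call that runs a real query.
print(measure_latency(lambda: sum(range(1000)), iterations=20))
```

Running the same harness against keyword-only, vector-only, and hybrid variants of a query makes the overhead of each added stage visible before it reaches production.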

Security and Access Control - Choose your access model: API keys or Microsoft Entra ID. For private networking, use Private Endpoints and Virtual Network (VNet) integration, available only on Standard and higher tiers. Ensure data is encrypted at rest and in transit. (Azure Security Controls)

Monitoring and Telemetry - Azure Monitor and built-in metrics provide insights into query volume, indexing performance, and error rates. You can also integrate with Log Analytics and Application Insights for alerting and deeper diagnostics.

Hybrid retrieval, semantic ranking, and vector search, combined with deep integration into Azure OpenAI and other Azure services, make Azure AI Search a strong fit for both LLM-powered solutions and enterprise search applications.

If you’re working to improve knowledge access, ground generative AI in trusted data, or deliver high-relevance discovery tools at scale, our team can help. At ITMAGINATION, we’ve been delivering AI and Machine Learning solutions since 2016, building a track record in aligning advanced technical capabilities with real-world business goals.

Over the past two years, we’ve expanded our generative AI and search expertise, delivering solutions that move beyond prototypes into secure, production-ready deployments with measurable impact.

Book a call with our experts to explore how Azure AI Search can be implemented in your environment to enhance discovery, improve relevance, and accelerate AI initiatives with confidence.
